Stored XSS vulnerability in image alt attribute to steal cookies (Bug Bounty)

I recently found a stored XSS vulnerability through an image alt attribute. While I can’t share any details about where, I can share details about how I found it, so let’s take a look at that in this article.

What caught my attention

I was going through this web application with the intention of finding bugs, but I was very early on in the process and simply trying to get a feel for how the application worked.

While doing that, I still like to focus on one or two vulnerability types so I have a concrete idea of where to test. My main go-to is XSS, so as I work through the application’s flow, I throw XSS payloads into user input fields and other areas, then keep an eye out for anything interesting that happens as I progress through the rest of the flow.

A lot of times, nothing comes of it. But sometimes, something interesting happens and we can take a closer look.

The latter happened this time around, because I started to notice that some of my XSS payloads were being handled differently in different parts of the application, and in different parts of the same web pages, but in similar contexts.

For example, I might see the full payload outputted as regular text in the top left corner of the web page (say for a page title), but then part of the payload would get stripped out in another section of the same page. Except, when I checked the context of where those payloads were being displayed, they were identical.

XSS context: the location where attacker-controlled data appears, for example HTML context, attribute context, JavaScript context, or URL context.

So you might have something like this on the webpage:

<h1><img src=1 onerror=alert(1)</h1>

Here, the <img src=1 onerror=alert(1) was properly being displayed as text instead of creating an HTML element.

But, further on the page, the same data appeared like this:

<div><(1)</div>

There, the img src=1 onerror=alert is getting stripped out.

Both of those are being displayed in the same context: between HTML tags. But they’re not being handled the same way by the application. This inconsistency made me curious to keep checking other contexts and other areas I could inject XSS payloads, to see if I could cause even more weird behavior.
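To make that inconsistency concrete, here’s a minimal Python sketch of how two parts of one page could end up treating the same input differently. I don’t know what the real application runs on or how its code looks, so `render_title`, `render_summary`, and the regex are all hypothetical, chosen only to reproduce the two outputs described above.

```python
import html
import re

# The test payload from the write-up (note: no closing ">").
payload = "<img src=1 onerror=alert(1)"


def render_title(value: str) -> str:
    # Hypothetical handler A: HTML-encode the value so the browser
    # displays it as plain text inside the <h1>.
    return f"<h1>{html.escape(value)}</h1>"


def render_summary(value: str) -> str:
    # Hypothetical handler B: a blocklist-style filter that deletes a
    # "suspicious" substring first, then encodes whatever is left.
    stripped = re.sub(
        r"img\s+src=\S+\s+onerror=\w+", "", value, flags=re.IGNORECASE
    )
    return f"<div>{html.escape(stripped)}</div>"


print(render_title(payload))    # <h1>&lt;img src=1 onerror=alert(1)</h1>
print(render_summary(payload))  # <div>&lt;(1)</div>
```

Both functions write into the same context (between HTML tags), but only the first one relies purely on encoding. When an app mixes approaches like this, it’s a hint that output handling was written piecemeal, which is exactly why it’s worth checking other contexts too.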

Breaking out of an HTML attribute

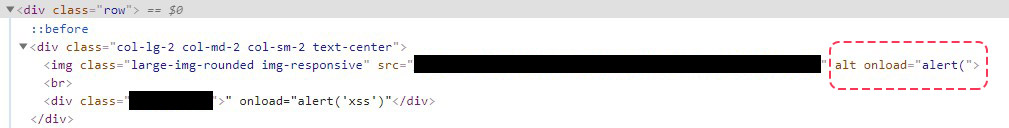

After about an hour, I noticed that one of my payloads was escaping out of an HTML alt attribute. I was navigating to a page and I again noticed that two areas were handling the payload differently. To see if anything was going on, I right-clicked on one of the neutralized payloads and inspected the element.

As soon as my DevTools opened up, my eye caught a weird-looking alt attribute.

My payload was being added to the alt attribute of an image on the page, which wasn’t visible until I looked at the source code. Except this time, my payload was closing the alt attribute of the image and creating a separate onload attribute.

At this point, the hunt is on. That’s a really good sign for a bounty hunter, and a really bad sign for the app, because it means my input is able to break out of its context and create new HTML attributes. The app isn’t properly encoding user input for this HTML attribute context, which is what makes it vulnerable.
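Here’s a rough Python sketch of what an attribute breakout like this looks like. The template, the `/photo.png` path, and the helper names are my own assumptions for illustration, not the real application’s markup. It uses the standard library’s `html.parser` to show which attributes a parser ends up seeing.

```python
from html.parser import HTMLParser


class AttrCollector(HTMLParser):
    """Collects the attributes of every start tag it parses."""

    def __init__(self) -> None:
        super().__init__()
        self.tags: list[tuple[str, dict]] = []

    def handle_starttag(self, tag, attrs):
        self.tags.append((tag, dict(attrs)))


def render_image(alt_text: str) -> str:
    # Hypothetical vulnerable template: user input is dropped into a
    # double-quoted attribute without encoding the quote characters.
    return f'<img src="/photo.png" alt="{alt_text}">'


def attributes_of(markup: str) -> dict:
    parser = AttrCollector()
    parser.feed(markup)
    parser.close()
    return parser.tags[0][1]


# A payload whose double quote closes alt="..." early.
payload = "'\" onload=\"window.alert?.(document.cookie)\""
attrs = attributes_of(render_image(payload))

print(attrs["alt"])     # '
print(attrs["onload"])  # window.alert?.(document.cookie)
```

The alt attribute ends up holding just the single quote, and everything after the injected double quote is parsed as a brand new onload attribute. No angle brackets are needed for this, so a filter that only looks for tags wouldn’t catch it.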

Finding a successful payload

Now that I found an entry point, it was only a matter of finding a successful payload that would do something somewhat useful in order to demonstrate impact.

Some of the payloads I threw at it were getting stripped. For example:

Payload: "></div><a href=javaSCRIPT:alert(/XSS/)>XSS</a>

Result: <a href="javaSCRIPT&colon;alert(/XSS/)">XSS</a>

Payload: "></div><a href=javaSCRIPT:alert(/XSS/)>XSS</a>

Result: <a>XSS</a>

Payload: "/><svg/on<script>load=prompt(document.cookie);>"/>

Result: "/>load=prompt(document.cookie);>"

So there was clearly some type of filtering going on, but definitely not enough. In the end, all it took was:

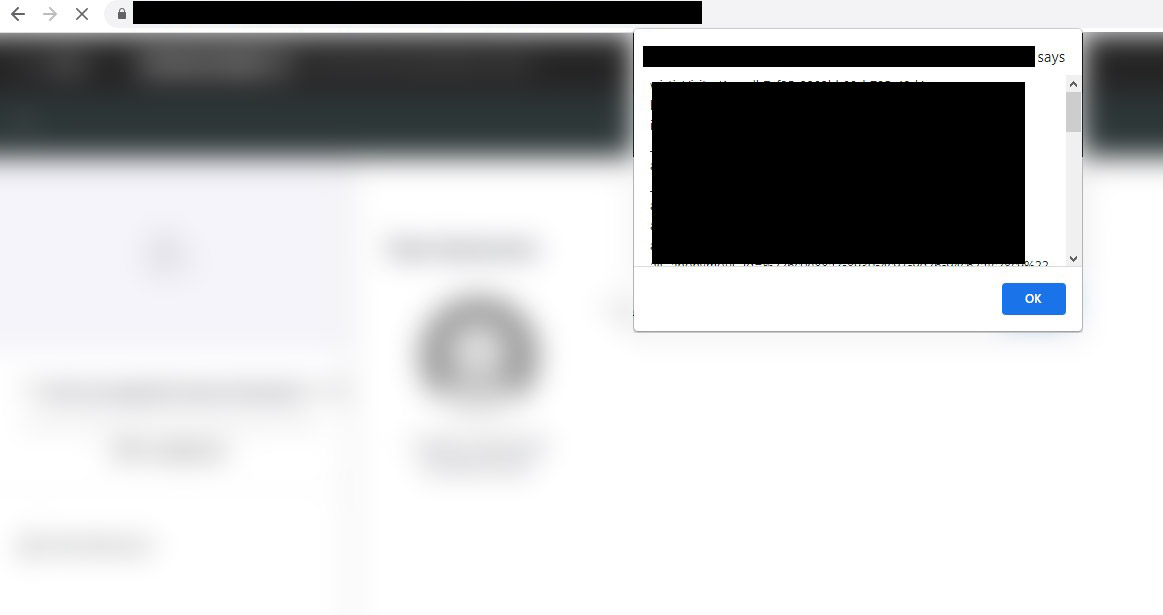

'" onload="window.alert?.(document.cookie)"

The Impact

The impact of this basic attack is potentially quite high. One reason is that, once published, this would have been a public page crawlable by search engines and linkable to anyone. This means I could potentially steal every visitor’s cookies without having to craft any special URL, just by having someone visit the page either organically or by linking them to it.

Obviously this payload just pops an alert box, which would tell the visitor that something is wrong and would do nothing useful for an attacker. But different payloads could be crafted to be discreet and to potentially send these cookies over to an attacker-controlled server, or to attempt other types of exploits.

One good thing the developers of this app did was add a character limit to this specific field, which would make crafting a more useful payload quite a bit more annoying than if they hadn’t. But, given the situation, that would only slow someone down and probably not stop them completely. I say that because if I found an XSS vulnerability there after just one hour of playing around, chances are high that I’ll find others somewhere else. Those others may not have the same character limits, or I might be able to chain them together.

In all, something like this definitely needs to get fixed.

How could this vulnerability be prevented and fixed?

I’ll write some blog posts on this later to go into more detail, but for now, I’ll leave you with a self-plug: my XSS course has a section dedicated to explaining how to properly neutralize XSS payloads depending on contexts and other factors in order to defend your apps against this serious threat.
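In the meantime, here’s a minimal sketch of the general idea for the attribute context specifically, assuming a Python backend (I don’t know the real app’s stack, and `render_image` and the image path are hypothetical). It uses the standard library’s `html.escape`, which encodes `&`, `<`, `>` and, with `quote=True`, both quote characters. Treat it as an illustration of encoding for a quoted attribute, not as a complete defense.

```python
import html


def render_image(alt_text: str) -> str:
    # Encode the value for a quoted attribute context. quote=True is
    # the default, but it is spelled out here because encoding the
    # quote characters is what prevents the attribute breakout.
    safe_alt = html.escape(alt_text, quote=True)
    # The attribute value must stay wrapped in quotes for this to hold.
    return f'<img src="/photo.png" alt="{safe_alt}">'


payload = "'\" onload=\"window.alert?.(document.cookie)\""
print(render_image(payload))
# <img src="/photo.png" alt="&#x27;&quot; onload=&quot;window.alert?.(document.cookie)&quot;">
```

With the quotes encoded, the whole payload stays inside the alt value and the browser never sees a separate onload attribute. Other contexts (JavaScript, URLs, unquoted attributes) need their own handling, so this one function isn’t something to reuse everywhere.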

I hope you enjoyed this write-up and I’ll see you in the next one!

Nice, but what I don’t understand is what would be classified as “Self-XSS”, because a while back I attempted to modify the console and added a mouse event. After I filed the report, I came to find out I got flagged for thinking this was reflected, but it was “Self-XSS”, which meant nothing to them. So how could I avoid this problem I have been having and improve my chances?

Hey Anthony, if you are making modifications in the browser’s console, that is self-XSS because you’re attacking yourself. That’s why self-XSS usually requires some sort of social engineering. For example, you might go to a user and tell them “hey, run this code in your console” and that code may send you that user’s authentication tokens, so it is still an attack that can have an impact, but it’s out of the web app developer’s control since it’s not going to be caused by a vulnerability in the web app itself. The console is a browser feature that only lets you make local modifications. The reason the bug I found is considered stored XSS is because I managed to store the payload in the application’s database, and unsuspecting visitors would trigger the XSS by simply navigating to the web page that extracts the payload from the database and renders it on the page. That *is* an application vulnerability because the application’s code is allowing malicious user input to get rendered by the browser.

Wow, interesting, that brings a whole new perspective. Also, what are your thoughts on “Exotic Payloads”? Do these add any real value to XSS hunting in the real world for bypassing those annoying WAFs?

I think the term “exotic xss payloads” was created to make it sound fancier than it really is. A payload is a payload. Some payloads do different things than others, but not entirely sure what makes one more ‘exotic’ than another 🙂

I was just curious because I remember you told me basic payloads just won’t work these days, since companies large or small will pretty much filter out the common ones out there.